Oracle, MySQL, Postgres, AWS, GCP, AI & ML Board

Monday, December 22, 2025

Completed Oracle Cloud Infrastructure 2025 Certified Multicloud Architect Professional

It was a great deep dive into how OCI integrates with other providers to create seamless, high-performance environments.

Wednesday, June 11, 2025

GCP: PostgreSQL Replication Slot

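What is a PostgreSQL Replication Slot?

A replication slot is a server-side record of how far a particular consumer (a standby or a logical subscriber) has read the write-ahead log (WAL). The primary keeps any WAL that a slot's consumer has not yet received, even while that consumer is disconnected.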

Why Do We Use Replication Slots?

- Reliable Streaming Replication: They prevent WAL segments from being deleted before a replica has received them. This ensures data consistency and avoids data loss during replication.

- Support for Logical Replication: In logical replication (e.g., streaming data to a different schema or database), slots track the replication progress.

- Resilient Replica Recovery: If a replica is temporarily down, WAL files are preserved until the replica reconnects and catches up.

Types of Replication Slots

1. Physical Replication Slot:

* Used in streaming replication.

* Tied to the physical binary structure of the database.

2. Logical Replication Slot:

* Used in logical replication (e.g., pub/sub model).

* Works at the level of SQL changes (INSERT/UPDATE/DELETE).

Privileges Needed for Replication Slots

To create or drop replication slots, a user needs:

* Superuser privileges OR

* The REPLICATION role attribute.

The functions involved are:

* Creating a replication slot: pg_create_physical_replication_slot() or pg_create_logical_replication_slot()

* Dropping a slot: pg_drop_replication_slot()

Common Errors and Resolutions:

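ERROR: replication slot <name> is already active

Cause: Another connection is already consuming from the slot

Resolution: Check active_pid in pg_replication_slots and stop the stale session before reconnecting

ERROR: could not write to file "pg_wal/...": No space left on device

Cause: WALs are retained because the slot is not being consumed

Resolution: Restart the stalled consumer, or drop the slot if it is no longer needed, so the retained WAL can be recycled

ERROR: replication slot "<slot>" does not exist

Cause: Trying to access a non-existent slot

Resolution: Verify the slot name or recreate the replication slot

ERROR: Logical replication slot <name> inactive for too long

Cause: The subscriber hasn't connected in a while

Resolution: Investigate the subscriber or consider dropping the slot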

Managing Replication Slots:

Create Physical Slot:

SELECT * FROM pg_create_physical_replication_slot('my_physical_slot');

Create Logical Slot:

SELECT * FROM pg_create_logical_replication_slot('my_logical_slot', 'pgoutput');

List All Slots:

SELECT * FROM pg_replication_slots;

Drop a Slot:

SELECT pg_drop_replication_slot('my_slot');

Best Practices:

- Monitor Slot Activity: Use pg_replication_slots to ensure slots are being consumed.

- Avoid Orphan Slots: Unused slots can fill the disk by retaining WALs. Drop them if not needed.

- Configure Monitoring/Alerts: Watch for lagging replicas or inactive slots.

- Use Logical Decoding Carefully: Logical slots can grow WALs fast if the subscriber is slow.
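
The monitoring advice above can be sketched in a few lines of Python. This is a minimal illustration, not a production check: it assumes the rows have already been fetched from pg_replication_slots (slot_name, active, restart_lsn) along with the current WAL position, and the helper names and the 64 MiB threshold are hypothetical. Inside the database, the same difference is available from pg_wal_lsn_diff(pg_current_wal_lsn(), restart_lsn).

```python
def lsn_to_int(lsn: str) -> int:
    """Convert a PostgreSQL LSN such as '16/B374D848' to a byte position.

    An LSN is written as two hexadecimal numbers: the high and low
    32 bits of a 64-bit WAL position.
    """
    high, low = lsn.split("/")
    return (int(high, 16) << 32) | int(low, 16)


def retained_wal_bytes(current_lsn: str, restart_lsn: str) -> int:
    """Bytes of WAL the server must keep for a slot."""
    return lsn_to_int(current_lsn) - lsn_to_int(restart_lsn)


def slots_to_review(slots, current_lsn, max_retained_bytes):
    """Return (name, retained_bytes) for slots that look unhealthy.

    `slots` is a list of (slot_name, active, restart_lsn) tuples.
    A slot is flagged when it is inactive or retains more WAL than
    `max_retained_bytes` (a hypothetical alert threshold).
    """
    flagged = []
    for name, active, restart_lsn in slots:
        if restart_lsn is None:
            continue  # the slot has not reserved any WAL yet
        retained = retained_wal_bytes(current_lsn, restart_lsn)
        if not active or retained > max_retained_bytes:
            flagged.append((name, retained))
    return flagged


if __name__ == "__main__":
    rows = [
        ("my_physical_slot", True, "0/5000000"),
        ("my_logical_slot", False, "0/1000000"),
    ]
    print(slots_to_review(rows, "0/6000000", 64 * 1024 * 1024))
```

Flagging inactive slots separately from large ones matters because an inactive slot keeps accumulating WAL until it is consumed or dropped.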

[Diagram: how replication slots work in a streaming or logical setup]

Wednesday, October 16, 2024

Oracle Enterprise Manager - OMS 13.5 patching

This post walks through patching Oracle Enterprise Manager OMS 13.5 to the latest RU23 patch (36494040). The first step is to run OMSPatcher in analyze mode, which checks prerequisites without applying anything:

[oracle@sajidserver OMSPatcher]$ ./omspatcher apply /usr/patches/36494040 -analyze

OMSPatcher Automation Tool

Copyright (c) 2017, Oracle Corporation. All rights reserved.

OMSPatcher version : 13.9.5.21.0

OUI version : 13.9.4.0.0

Running from : /usr/middleware

Log file location : /usr/middleware/cfgtoollogs/omspatcher/opatch2024-10-07_14-17-30PM_1.log

WARNING:Apply the 12.2.1.4.0 version of the following JDBC Patch(es) on OMS Home before proceeding with patching.

1.MLR patch 35430934(or its superset),which includes bugs 32720458 and 33607709

2.Patch 31657681

3.Patch 34153238

OMSPatcher log file: /usr/middleware/cfgtoollogs/omspatcher/36494040/omspatcher_2024-10-16PM_analyze.log

Please enter OMS weblogic admin server URL(t3s://sajidserver:7114):>

Please enter OMS weblogic admin server username(weblogic):> weblogic

Please enter OMS weblogic admin server password:>

Enter DB user name : sys

Enter 'SYS' password :

Checking if current repository database is a supported version

Current repository database version is supported

Prereq "checkComponents" for patch 36487738 passed.

Prereq "checkComponents" for patch 36329231 passed.

Prereq "checkComponents" for patch 35582217 passed.

Prereq "checkComponents" for patch 36487989 passed.

Prereq "checkComponents" for patch 36487743 passed.

Prereq "checkComponents" for patch 36487747 passed.

Prereq "checkComponents" for patch 36487829 passed.

Configuration Validation: Success

Running apply prerequisite checks for sub-patch(es) "35582217,36487738,36487747,36487989,36487829,36487743,36329231" and Oracle Home "/usr/middleware"...

Sub-patch(es) "35582217,36487738,36487747,36487989,36487829,36487743,36329231" are successfully analyzed for Oracle Home "/usr/middleware"

Complete Summary

================

All log file names referenced below can be accessed from the directory "/usr/middleware/cfgtoollogs/omspatcher/2024-10-16PM_SystemPatch_36494040_1"

Prerequisites analysis summary:

-------------------------------

The following sub-patch(es) are applicable:

Featureset Sub-patches Log file

---------- ----------- --------

oracle.sysman.top.oms 35582217,36487738,36487747,36487989,36487829,36487743,36329231 35582217,36487738,36487747,36487989,36487829,36487743,36329231_opatch2024-10-07_14-18-40PM_1.log

The following sub-patches are incompatible with components installed in the OMS system:

34430509,34706773,36487761,35854914,36161799,36329046,36487844,36329220

--------------------------------------------------------------------------------

The following warnings have occurred during OPatch execution:

1) Apply the 12.2.1.4.0 version of the following JDBC Patch(es) on OMS Home before proceeding with patching.

1.MLR patch 35430934(or its superset),which includes bugs 32720458 and 33607709

2.Patch 31657681

3.Patch 34153238

--------------------------------------------------------------------------------

Log file location: /usr/omspatcher/36494040/omspatcher_2024-10-07_14-17-34PM_analyze.log

OMSPatcher succeeded.
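
When the analyze output is long, a small script can confirm that every listed sub-patch has a passing prerequisite line. The sketch below is only an illustration: it relies solely on the two line formats visible in the output above, the function names are hypothetical, and it is not part of OMSPatcher.

```python
import re


def passed_patches(log_text: str) -> set:
    """Patch numbers that have a passing 'checkComponents' prerequisite line."""
    pattern = r'Prereq "checkComponents" for patch (\d+) passed\.'
    return set(re.findall(pattern, log_text))


def listed_subpatches(log_text: str) -> set:
    """Sub-patch numbers named in the 'Running apply prerequisite checks' line."""
    match = re.search(r'prerequisite checks for sub-patch\(es\) "([\d,]+)"', log_text)
    return set(match.group(1).split(",")) if match else set()


def unverified_subpatches(log_text: str) -> set:
    """Sub-patches that are listed but have no passing prerequisite line."""
    return listed_subpatches(log_text) - passed_patches(log_text)


if __name__ == "__main__":
    sample = (
        'Prereq "checkComponents" for patch 36487738 passed.\n'
        'Prereq "checkComponents" for patch 36329231 passed.\n'
        'Running apply prerequisite checks for sub-patch(es) '
        '"36487738,36329231" and Oracle Home "/usr/middleware"...\n'
    )
    print(unverified_subpatches(sample))  # an empty set means nothing is missing
```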

##########

Resolution:

##########

To apply the patch successfully, complete the following steps first:

1. Install the three JDBC patches listed in the warning:

- MLR patch 35430934

- Patch 31657681

- Patch 34153238

2. Remove and then reinstall the EMDiag Kit in the OEM repository.

After these steps, OMSPatcher should run successfully.

[oracle@sajidserver OMSPatcher]$ /usr/OMSPatcher/omspatcher apply /usr/patches/36494040 OMSPatcher.OMS_DISABLE_HOST_CHECK=true -invPtrLoc /usr/middleware/oraInst.loc

OMSPatcher Automation Tool

Copyright (c) 2017, Oracle Corporation. All rights reserved.

OMSPatcher version : 13.9.5.21.0

OUI version : 13.9.4.0.0

Running from : /usr/middleware

Log file location : /usr/middleware/cfgtoollogs/omspatcher/opatch2024-08-11_10-24-05AM_1.log

OMSPatcher log file: /u01/app/oracle/oms13cr5/middleware/cfgtoollogs/omspatcher/36494040/omspatcher_2024-08-11_10-24-11AM_apply.log

Please enter OMS weblogic admin server URL(t3s://sajidserver:7114):>

Please enter OMS weblogic admin server username(weblogic):>

Please enter OMS weblogic admin server password:>

Enter DB user name : sys

Enter 'sys' password :

Checking if current repository database is a supported version

Current repository database version is supported

Prereq "checkComponents" for patch 36487738 passed.

Prereq "checkComponents" for patch 36329231 passed.

Prereq "checkComponents" for patch 35582217 passed.

Prereq "checkComponents" for patch 36487989 passed.

Prereq "checkComponents" for patch 36487743 passed.

Prereq "checkComponents" for patch 36487747 passed.

Prereq "checkComponents" for patch 36487829 passed.

Configuration Validation: Success

Running apply prerequisite checks for sub-patch(es) "35582217,36487738,36487747,36487989,36487829,36487743,36329231" and Oracle Home "/

Sub-patch(es) "35582217,36487738,36487747,36487989,36487829,36487743,36329231" are successfully analyzed for Oracle Home

To continue, OMSPatcher will do the following:

[Patch and deploy artifacts] : Apply sub-patch(es) [ 35582217 36329231 36487738 36487743 36487747 36487829 36487989 ]

Register MRS artifact "commands";

Register MRS artifact "omsPropertyDef";

Register MRS artifact "targetType";

Register MRS artifact "chargeback";

Register MRS artifact "default_collection";

Register MRS artifact "assoc";

Register MRS artifact "jobTypes";

Register MRS artifact "systemStencil";

Register MRS artifact "procedures";

Register MRS artifact "discovery";

Register MRS artifact "EcmMetadataOnlyRegistration";

Register MRS artifact "swlib";

Register MRS artifact "namedQuery";

Register MRS artifact "TargetPrivilege";

Register MRS artifact "CredStoreMetadata";

Register MRS artifact "storeTargetType";

Register MRS artifact "gccompliance";

Register MRS artifact "SecurityClassManager";

Register MRS artifact "OutOfBoxRole";

Register MRS artifact "report";

Register MRS artifact "namedsql";

Register MRS artifact "runbooks";

Register MRS artifact "derivedAssocs"

Do you want to proceed? [y|n]

y

User Responded with: Y

Stopping the OMS.....

Please monitor log file: /usr/middleware/cfgtoollogs/omspatcher/2024-08-11_10-33-25AM_SystemPatch_36494040_9/stop_o

[Output truncated]

Deployment summary:

-------------------

The following artifact(s) have been successfully deployed:

Artifacts Log file

--------- --------

SQL rcu_applypatch_original_patch_2024-08-11_10-41-18AM.log

SQL rcu_applypatch_original_patch_2024-08-11_10-45-35AM.log

SQL rcu_applypatch_original_patch_2024-08-11_10-46-02AM.log

SQL rcu_applypatch_original_patch_2024-08-11_10-46-29AM.log

SQL rcu_applypatch_original_patch_2024-08-11_10-46-59AM.log

SQL rcu_applypatch_original_patch_2024-08-11_10-47-33AM.log

SQL rcu_applypatch_original_patch_2024-08-11_10-48-34AM.log

MRS-commands emctl_register_commands_2024-08-11_10-49-17AM.log

MRS-commands emctl_register_commands_2024-08-11_10-50-00AM.log

MRS-commands emctl_register_commands_2024-08-11_10-50-34AM.log

MRS-omsPropertyDef emctl_register_omsPropertyDef_2024-08-11_10-51-08AM.log

MRS-omsPropertyDef emctl_register_omsPropertyDef_2024-08-11_10-51-44AM.log

MRS-omsPropertyDef emctl_register_omsPropertyDef_2024-08-11_10-52-19AM.log

MRS-targetType emctl_register_targetType_2024-08-11_10-52-53AM.log

MRS-targetType emctl_register_targetType_2024-08-11_10-54-14AM.log

MRS-targetType emctl_register_targetType_2024-08-11_10-57-28AM.log

MRS-targetType emctl_register_targetType_2024-08-11_10-58-56AM.log

MRS-targetType emctl_register_targetType_2024-08-11_11-08-30AM.log

MRS-chargeback emctl_register_chargeback_2024-08-11_11-09-39AM.log

MRS-chargeback emctl_register_chargeback_2024-08-11_11-10-13AM.log

MRS-default_collection emctl_register_default_collection_2024-08-11_11-10-46AM.log

MRS-default_collection emctl_register_default_collection_2024-08-11_11-11-41AM.log

MRS-default_collection emctl_register_default_collection_2024-08-11_11-13-51AM.log

MRS-default_collection emctl_register_default_collection_2024-08-11_11-14-41AM.log

MRS-default_collection emctl_register_default_collection_2024-08-11_11-16-53AM.log

MRS-assoc emctl_register_assoc_2024-08-11_11-17-41AM.log

MRS-assoc emctl_register_assoc_2024-08-11_11-18-16AM.log

MRS-assoc emctl_register_assoc_2024-08-11_11-18-50AM.log

MRS-jobTypes emctl_register_jobTypes_2024-08-11_11-19-25AM.log

MRS-jobTypes emctl_register_jobTypes_2024-08-11_11-20-02AM.log

MRS-jobTypes emctl_register_jobTypes_2024-08-11_11-20-37AM.log

MRS-jobTypes emctl_register_jobTypes_2024-08-11_11-21-15AM.log

MRS-systemStencil emctl_register_systemStencil_2024-08-11_11-21-50AM.log

MRS-systemStencil emctl_register_systemStencil_2024-08-11_11-22-25AM.log

MRS-systemStencil emctl_register_systemStencil_2024-08-11_11-22-59AM.log

MRS-systemStencil emctl_register_systemStencil_2024-08-11_11-23-38AM.log

MRS-procedures emctl_register_procedures_2024-08-11_11-24-15AM.log

MRS-procedures emctl_register_procedures_2024-08-11_11-24-54AM.log

MRS-discovery emctl_register_discovery_2024-08-11_11-25-29AM.log

MRS-EcmMetadataOnlyRegistration emctl_register_EcmMetadataOnlyRegistration_2024-08-11_11-26-04AM.log

MRS-EcmMetadataOnlyRegistration emctl_register_EcmMetadataOnlyRegistration_2024-08-11_11-26-42AM.log

MRS-EcmMetadataOnlyRegistration emctl_register_EcmMetadataOnlyRegistration_2024-08-11_11-27-28AM.log

MRS-EcmMetadataOnlyRegistration emctl_register_EcmMetadataOnlyRegistration_2024-08-11_11-28-04AM.log

MRS-EcmMetadataOnlyRegistration emctl_register_EcmMetadataOnlyRegistration_2024-08-11_11-28-40AM.log

MRS-swlib emctl_register_swlib_2024-08-11_11-29-17AM.log

MRS-swlib emctl_register_swlib_2024-08-11_11-29-55AM.log

MRS-swlib emctl_register_swlib_2024-08-11_11-30-30AM.log

MRS-swlib emctl_register_swlib_2024-08-11_11-31-06AM.log

MRS-swlib emctl_register_swlib_2024-08-11_11-31-47AM.log

MRS-namedQuery emctl_register_namedQuery_2024-08-11_11-32-22AM.log

MRS-namedQuery emctl_register_namedQuery_2024-08-11_11-32-57AM.log

MRS-namedQuery emctl_register_namedQuery_2024-08-11_11-33-32AM.log

MRS-TargetPrivilege emctl_register_TargetPrivilege_2024-08-11_11-34-09AM.log

MRS-CredStoreMetadata emctl_register_CredStoreMetadata_2024-08-11_11-34-48AM.log

MRS-storeTargetType emctl_register_storeTargetType_2024-08-11_11-35-22AM.log

MRS-storeTargetType emctl_register_storeTargetType_2024-08-11_11-35-57AM.log

MRS-storeTargetType emctl_register_storeTargetType_2024-08-11_11-36-40AM.log

MRS-storeTargetType emctl_register_storeTargetType_2024-08-11_11-37-16AM.log

MRS-storeTargetType emctl_register_storeTargetType_2024-08-11_11-37-57AM.log

MRS-gccompliance emctl_register_gccompliance_2024-08-11_11-38-33AM.log

MRS-SecurityClassManager emctl_register_SecurityClassManager_2024-08-11_11-47-05AM.log

MRS-OutOfBoxRole emctl_register_OutOfBoxRole_2024-08-11_11-47-39AM.log

MRS-report emctl_register_report_2024-08-11_11-48-14AM.log

MRS-namedsql emctl_register_namedsql_2024-08-11_11-48-49AM.log

MRS-runbooks emctl_register_runbooks_2024-08-11_11-49-22AM.log

MRS-derivedAssocs emctl_register_derivedAssocs_2024-08-11_11-49-57AM.log

MRS-derivedAssocs emctl_register_derivedAssocs_2024-08-11_11-50-34AM.log

MRS-derivedAssocs emctl_register_derivedAssocs_2024-08-11_11-51-08AM.log

Log file location: /usr/omspatcher/36494040/omspatcher_2024-08-11_10-24-11AM_apply.log

OMSPatcher succeeded.

[oracle@sajidserver OMSPatcher]$ emctl status oms

Oracle Enterprise Manager Cloud Control 13c Release 5

Copyright (c) 1996, 2021 Oracle Corporation. All rights reserved.

WebTier is Up

Oracle Management Server is Up

JVMD Engine is Up

Friday, February 16, 2024

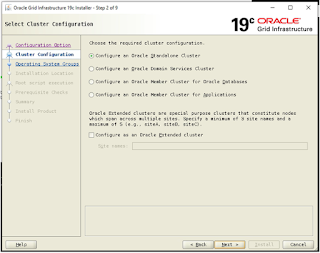

Oracle RAC 19c Installation on Google Cloud Platform

This post covers installing Oracle 19c Grid Infrastructure on the Google Cloud VMware Engine (GCVE) platform. Download the current Oracle Grid and Database software from https://www.oracle.com/database/technologies/oracle19c-linux-downloads.html. After downloading and staging the software on the server, confirm that the operating system meets the requirements listed at https://docs.oracle.com/en/database/oracle/oracle-database/19/ladbi/supported-red-hat-enterprise-linux-8-distributions-for-x86-64.html#GUID-B1487167-84F8-4F8D-AC31-A4E8F592374B.

Before starting the installer, prepare the shared disks that the ASM disk groups will use. Once the disks are in place, the installation can begin.

cd $GRID_HOME

./gridSetup.sh

At this point, the Clusterware is up and running, and the installation of the Oracle Grid cluster on the Google Cloud VMware Engine (GCVE) platform is complete.

Friday, January 5, 2024

GCP Interview Questions

1. What is Google Cloud Platform (GCP)?

GCP is a suite of cloud computing services provided by Google. It offers various services, including computing power, storage, and databases, as well as machine learning, data analytics, and networking services. GCP enables organizations to build, deploy, and scale applications efficiently in the cloud.

2. Explain the key components of GCP.

Compute Engine: Provides virtual machines (VMs) for running applications.

App Engine: A platform-as-a-service (PaaS) offering for building and deploying applications without managing the underlying infrastructure.

Kubernetes Engine: A managed Kubernetes service for container orchestration.

Cloud Storage: Object storage service for storing and retrieving data.

BigQuery: Serverless data warehouse for analytics.

Cloud SQL: Managed relational database service.

Cloud Pub/Sub: Messaging service for building event-driven systems.

Cloud Spanner: Globally distributed, horizontally scalable database.

3. Explain the difference between Compute Engine and App Engine.

Compute Engine: Infrastructure as a Service (IaaS) offering that provides virtual machines. Users have full control over the VMs, including the operating system and software configurations.

App Engine: Platform as a Service (PaaS) offering that abstracts away the infrastructure details. Developers focus on writing code, and Google manages the underlying infrastructure, automatically scaling as needed.

4. What is Kubernetes, and how does GCP support it?

Kubernetes: An open-source container orchestration platform for automating the deployment, scaling, and management of containerized applications.

GCP Kubernetes Engine: A managed Kubernetes service that simplifies the deployment and operation of Kubernetes clusters. It automates tasks like cluster provisioning, scaling, and upgrades.

5. Explain Cloud Storage Classes in GCP.

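Standard: General-purpose storage for frequently accessed data, with no minimum storage duration.

Nearline: Low-cost storage for data accessed less than about once a month, with a 30-day minimum storage duration.

Coldline: Very low-cost storage for data accessed less than about once a quarter, with a 90-day minimum storage duration.

Archive: Lowest-cost option for long-term storage accessed less than about once a year, with a 365-day minimum storage duration.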

6. How does Cloud Identity and Access Management (IAM) work in GCP?

IAM: Manages access control by defining roles and assigning them to users or groups.

Roles: Define permissions, and users are granted those roles.

Principals: Entities that can request access, such as users, groups, or service accounts.

7. Explain Google Cloud Pub/Sub.

Pub/Sub: A messaging service for building event-driven systems. Publishers send messages to topics, and subscribers receive messages from subscriptions to those topics.

Topics: Channels for publishing messages.

Subscriptions: Named resources representing the stream of messages from a single, specific topic.
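
The topic and subscription relationship can be illustrated with a toy in-memory model. This is a sketch of the concept only, assuming nothing about the real service: the ToyPubSub class and its methods are hypothetical and are not the Google Cloud Pub/Sub client library.

```python
from collections import defaultdict, deque


class ToyPubSub:
    """In-memory model: each subscription gets its own copy of every message."""

    def __init__(self):
        # topic name -> {subscription name -> queue of undelivered messages}
        self._subscriptions = defaultdict(dict)

    def create_subscription(self, topic, subscription):
        self._subscriptions[topic][subscription] = deque()

    def publish(self, topic, message):
        # A publisher only knows the topic; fan-out to subscriptions happens here.
        for queue in self._subscriptions[topic].values():
            queue.append(message)

    def pull(self, topic, subscription):
        """Return the next message for a subscription, or None if it is empty."""
        queue = self._subscriptions[topic][subscription]
        return queue.popleft() if queue else None


if __name__ == "__main__":
    bus = ToyPubSub()
    bus.create_subscription("orders", "billing")
    bus.create_subscription("orders", "shipping")
    bus.publish("orders", "order-created")
    print(bus.pull("orders", "billing"), bus.pull("orders", "shipping"))
```

Because each subscription has its own queue, two independent consumers both see every message, while a consumer that falls behind does not slow the other one down.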

8. What is Google Cloud BigQuery, and how is it different from traditional databases?

BigQuery: A fully managed, serverless data warehouse for analytics. It enables super-fast SQL queries using the processing power of Google's infrastructure.

Differences: BigQuery is designed for analytical workloads and can handle massive datasets with high concurrency, while traditional databases are often optimized for transactional workloads.

9. Explain the concept of Virtual Private Cloud (VPC) in GCP.

VPC: A private network for GCP resources. It provides isolation, segmentation, and control over the network environment.

Subnets: Segments of the IP space within a VPC, allowing for further network isolation.

Firewall Rules: Control traffic to and from instances.

10. What are Cloud Functions in GCP?

Cloud Functions: Serverless compute service that allows you to run event-triggered functions without provisioning or managing servers.

Event Sources: Triggers for Cloud Functions, such as changes in Cloud Storage, Pub/Sub messages, or HTTP requests.

11. What is Stackdriver in GCP?

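Stackdriver: The former name of what is now Google Cloud Observability (previously Google Cloud's operations suite). It is a comprehensive observability suite that includes logging, monitoring, trace analysis, and error reporting, now offered as Cloud Logging, Cloud Monitoring, Cloud Trace, and Error Reporting. It provides tools for developers and operators to gain insights into the performance, availability, and overall health of their applications.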

12. Explain Stackdriver Logging.

Stackdriver Logging: A fully-managed logging service that allows you to store, search, analyze, and alert on log data. It collects log entries from applications and infrastructure and provides a centralized location for log management.

13. What are log entries in Stackdriver Logging?

Log Entries: Records of events generated by GCP resources. Each log entry has a timestamp, severity level, log name, and payload containing specific information about the event.

14. How can you view logs in Stackdriver Logging?

Stackdriver Console: You can view logs interactively in the Stackdriver Logging console. It provides a user-friendly interface to search, filter, and analyze logs.

15. Explain the concept of Log Severity Levels in Stackdriver Logging.

Severity Levels: Indicate the importance of a log entry. In increasing order, the levels are DEFAULT, DEBUG, INFO, NOTICE, WARNING, ERROR, CRITICAL, ALERT, and EMERGENCY. Setting and using severity levels helps in identifying and addressing issues effectively.
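
Because the levels are ordered, filtering by severity is a simple threshold comparison. The sketch below assumes log entries have already been loaded as plain dictionaries with a "severity" key (a hypothetical shape chosen for illustration); the numeric values follow the LogSeverity enumeration used by the logging API.

```python
# Numeric values of the LogSeverity levels, lowest to highest.
SEVERITY = {
    "DEFAULT": 0,
    "DEBUG": 100,
    "INFO": 200,
    "NOTICE": 300,
    "WARNING": 400,
    "ERROR": 500,
    "CRITICAL": 600,
    "ALERT": 700,
    "EMERGENCY": 800,
}


def at_or_above(entries, minimum):
    """Keep entries whose severity is at least `minimum`."""
    threshold = SEVERITY[minimum]
    return [entry for entry in entries if SEVERITY[entry["severity"]] >= threshold]


if __name__ == "__main__":
    sample = [
        {"severity": "INFO", "message": "request served"},
        {"severity": "ERROR", "message": "upstream timeout"},
        {"severity": "CRITICAL", "message": "disk full"},
    ]
    for entry in at_or_above(sample, "ERROR"):
        print(entry["severity"], entry["message"])
```

This mirrors a log query such as severity>=ERROR, which also matches CRITICAL, ALERT, and EMERGENCY entries.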

16. What is Stackdriver Monitoring?

Stackdriver Monitoring: A service that provides visibility into the performance, uptime, and overall health of applications and services. It includes dashboards, alerting policies, and metrics collection.

17. Explain Stackdriver Dashboards.

Dashboards: Customizable visual displays that allow users to aggregate and display metrics and charts for monitoring purposes. Dashboards can include charts, text widgets, and predefined components.

18. How does Stackdriver Monitoring use Metrics?

Metrics: Quantitative measurements representing the behavior of a system over time. Stackdriver Monitoring collects and stores metrics that help in understanding the performance and health of resources.

19. What is an Alert Policy in Stackdriver Monitoring?

Alert Policy: Defines conditions for triggering alerts based on specified metrics and thresholds. When conditions are met, notifications can be sent via various channels like email, SMS, or third-party integrations.

20. Explain the integration of Stackdriver Trace with Logging and Monitoring.

Stackdriver Trace: A distributed tracing service that allows you to trace the performance of requests as they travel through your application.

Integration: Trace data can be correlated with logs and monitoring metrics in Stackdriver, providing a comprehensive view of the application's behavior.

21. How can you export logs from Stackdriver Logging?

Export Sinks: You can export logs to other Google Cloud services, Cloud Storage, or external systems using export sinks. This allows for archiving, analysis, and integration with third-party tools.

22. Explain the concept of Metrics Explorer in Stackdriver Monitoring.

Metrics Explorer: A tool in the Stackdriver Monitoring console that allows users to explore and visualize metrics data. It provides a flexible interface for creating custom charts and analyzing metric data.

23. How does Stackdriver handle autoscaling in GCP?

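Autoscaler: Autoscaling is a feature of Compute Engine managed instance groups rather than of Stackdriver itself. An autoscaling policy can use metrics collected by Stackdriver Monitoring, alongside signals such as CPU utilization or load-balancing capacity, to dynamically adjust the number of instances in the group. This matches resource usage to demand.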

24. What is the purpose of Stackdriver Error Reporting?

Error Reporting: Automatically detects and aggregates errors produced by applications. It provides insights into the frequency and impact of errors, helping identify and resolve issues.

25. How can you set up alerting in Stackdriver Monitoring?

Alerting Policies: You can create alerting policies in Stackdriver to define conditions for triggering alerts. These policies can be associated with specific resources, and notifications can be configured for various channels.

Wednesday, November 1, 2023

Autonomous Health Framework (AHF) Installation

[root@sajidserver01 tfa]# unzip AHF-LINUX_v23.9.0.zip

replace ahf_setup? [y]es, [n]o, [A]ll, [N]one, [r]ename: A

inflating: ahf_setup

extracting: ahf_setup.dat

inflating: README.txt

inflating: oracle-tfa.pub

[root@sajidserver01 tfa]# ./ahf_setup -local

AHF Installer for Platform Linux Architecture x86_64

AHF Installation Log : /tmp/ahf_install_239000.log

Starting Autonomous Health Framework (AHF) Installation

AHF Version: 23.9.0 Build Date: 202310

AHF is already installed at /usr/tfa/oracle.ahf

Installed AHF Version: 23.4.2 Build Date: 202305

Do you want to upgrade AHF [Y]|N : Y

Upgrading /usr/tfa/oracle.ahf

Shutting down AHF Services

Upgrading AHF Services

Beginning Retype Index

TFA Home: /usr/tfa/oracle.ahf/tfa

Moving existing indexes into temporary folder

Index file for index moved successfully

Index file for index_metadata moved successfully

Index file for complianceindex moved successfully

Moved indexes successfully

Starting AHF Services

No new directories were added to TFA

Directory /usr/grid/crsdata/sajidserver01/trace/chad was already added to TFA Directories.

Do you want AHF to store your My Oracle Support Credentials for Automatic Upload ? Y|[N] : N

.-------------------------------------------------------------.

| Host          | TFA Version | TFA Build ID | Upgrade Status |

+---------------+-------------+--------------+----------------+

| Sajidserver01 | 23.9.0.0.0  | 23090002023  | UPGRADED       |

| Sajidserver02 | 23.9.0.0.0  | 23090002023  | UPGRADED       |

'---------------+-------------+--------------+----------------'

Setting up AHF CLI and SDK

AHF is successfully upgraded to latest version.

[root@sajidserver01 bin]# ./tfactl status

.---------------------------------------------------------------------------------------------.

| Host          | Status of TFA | PID    | Port | Version    | Build ID    | Inventory Status |

+---------------+---------------+--------+------+------------+-------------+------------------+

| sajidserver01 | RUNNING       | 368155 | 8200 | 23.9.0.0.0 | 23090002023 | COMPLETE         |

| sajidserver02 | RUNNING       | 942953 | 8200 | 23.9.0.0.0 | 23090002023 | COMPLETE         |

'---------------+---------------+--------+------+------------+-------------+------------------'
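
On clusters with many nodes, the status table can be checked by script rather than by eye. The following is a rough sketch that assumes the table text has been captured into a string and that the column names match the output above; the helper names are hypothetical and this is not an AHF feature.

```python
def parse_status_table(text):
    """Turn the pipe-delimited rows of a status table into dictionaries."""
    rows = []
    for line in text.splitlines():
        line = line.strip()
        if not line.startswith("|"):
            continue  # skip border lines and blanks
        rows.append([cell.strip() for cell in line.strip("|").split("|")])
    if not rows:
        return []
    header, *data = rows
    return [dict(zip(header, row)) for row in data]


def nodes_needing_attention(text, expected_version):
    """Hosts that are not RUNNING or are not on the expected version."""
    return [
        row["Host"]
        for row in parse_status_table(text)
        if row["Status of TFA"] != "RUNNING" or row["Version"] != expected_version
    ]


if __name__ == "__main__":
    table = """
| Host | Status of TFA | PID | Port | Version | Build ID | Inventory Status |
| sajidserver01 | RUNNING | 368155 | 8200 | 23.9.0.0.0 | 23090002023 | COMPLETE |
| sajidserver02 | RUNNING | 942953 | 8200 | 23.9.0.0.0 | 23090002023 | COMPLETE |
"""
    print(nodes_needing_attention(table, "23.9.0.0.0"))
```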

[root@sajidserver01 bin]# ./tfactl toolstatus

Running command tfactltoolstatus on sajidserver01 ...

.------------------------------------------------------------------.

| TOOLS STATUS - HOST : sajidserver01 |

+----------------------+--------------+--------------+-------------+

| Tool Type | Tool | Version | Status |

+----------------------+--------------+--------------+-------------+

| AHF Utilities | alertsummary | 23.0.9 | DEPLOYED |

| | calog | 23.0.9 | DEPLOYED |

| | dbglevel | 23.0.9 | DEPLOYED |

| | grep | 23.0.9 | DEPLOYED |

| | history | 23.0.9 | DEPLOYED |

| | ls | 23.0.9 | DEPLOYED |

| | managelogs | 23.0.9 | DEPLOYED |

| | menu | 23.0.9 | DEPLOYED |

| | param | 23.0.9 | DEPLOYED |

| | ps | 23.0.9 | DEPLOYED |

| | pstack | 23.0.9 | DEPLOYED |

| | summary | 23.0.9 | DEPLOYED |

| | tail | 23.0.9 | DEPLOYED |

| | triage | 23.0.9 | DEPLOYED |

| | vi | 23.0.9 | DEPLOYED |

+----------------------+--------------+--------------+-------------+

| Development Tools | oratop | 14.1.2 | DEPLOYED |

+----------------------+--------------+--------------+-------------+

| Support Tools Bundle | darda | 2.10.0.R6036 | DEPLOYED |

| | oswbb | 22.1.0AHF | RUNNING |

| | prw | 12.1.13.11.4 | RUNNING |

'----------------------+--------------+--------------+-------------'

Note :-

DEPLOYED : Installed and Available - To be configured or run interactively.

NOT RUNNING : Configured and Available - Currently turned off interactively.

RUNNING : Configured and Available.

[root@sajidserver01 bin]# ./tfactl -help

Usage : /usr/19.0.0/grid/bin/tfactl <command> [options]

commands:diagcollect|analyze|ips|run|start|stop|enable|disable|status|print|access|purge|directory|host|set|toolstatus|uninstall|diagnosetfa|syncnodes|upload|availability|rest|events|search|changes|isa|blackout|rediscover|modifyprofile|refreshconfig|get|version|floodcontrol|queryindex|index|purgeindex|purgeinventory|set-sslconfig|set-ciphersuite|collection

Wednesday, October 4, 2023

Mysqldump: Error 2020: Got packet bigger than 'max_allowed_packet'

When backing up a large database with the mysqldump utility, you may encounter the error below.

mysqldump: Error 2020: Got packet bigger than 'max_allowed_packet' bytes when dumping table `log` at row:

To complete the backup, pass the option --max_allowed_packet=1024M to mysqldump on the command line; this raises the client-side packet limit that triggers the error.

[mysql@Sajidserver ~]$

Alternatively, add max_allowed_packet=1024M under the [mysqldump] section of the my.cnf file, save it, and run the backup normally.
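
For scripted backups, the command-line fix can be wrapped in a small helper. This is a minimal sketch under stated assumptions: the database name appdb and the output file appdb.sql are hypothetical, and connection credentials are assumed to come from an option file rather than the command line.

```python
import shlex


def mysqldump_command(database, output_file, max_allowed_packet="1024M"):
    """Build a mysqldump argument list with a raised client packet limit."""
    return [
        "mysqldump",
        f"--max_allowed_packet={max_allowed_packet}",
        f"--result-file={output_file}",
        database,
    ]


if __name__ == "__main__":
    args = mysqldump_command("appdb", "appdb.sql")
    # Print the command for review; pass `args` to subprocess.run to execute it.
    print(shlex.join(args))
```

Building the arguments as a list avoids shell quoting problems if a database or file name contains spaces.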

Thursday, July 13, 2023

Machine Learning

Machine learning is a branch of artificial intelligence that provides computers with the ability to learn without being explicitly programmed. Machine learning focuses on the development of computer programs that can access data and use it to learn for themselves.

Machine learning is the study of algorithms that modify their behavior as they process new data.

Machine learning algorithms are used in many areas, including but not limited to:

2. Natural Language Processing (NLP)

3. Robotics

4. Facial Recognition

5. Stock Market Analysis

Machine Learning is the science of getting computers to act without being explicitly programmed.

Machine learning algorithms can be broken down into two categories: supervised and unsupervised. Supervised learning algorithms use input data that has been labeled by a human to train the machine, while unsupervised learning algorithms do not have this labeled data and instead look for patterns in the raw data itself.

Supervised learning algorithms can be further divided into regression and classification models. Regression models are used to predict continuous values, such as stock prices over time or how quickly a car will drive on a highway, while classification models are used to predict discrete values, such as whether or not someone has cancer or if a person has purchased something on a website before.
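
The difference between regression and classification can be shown with two tiny supervised models written in plain Python. Both are illustrative sketches on made-up numbers, chosen for brevity rather than accuracy: a least-squares line for regression and a nearest-centroid rule for classification.

```python
def fit_line(xs, ys):
    """Regression: least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def nearest_centroid(train, point):
    """Classification: pick the label whose training mean is closest to `point`.

    `train` is a list of (value, label) pairs with one numeric feature.
    """
    sums = {}
    for value, label in train:
        total, count = sums.get(label, (0.0, 0))
        sums[label] = (total + value, count + 1)
    centroids = {label: total / count for label, (total, count) in sums.items()}
    return min(centroids, key=lambda label: abs(centroids[label] - point))


if __name__ == "__main__":
    # Continuous target: the model outputs a number.
    slope, intercept = fit_line([1, 2, 3, 4], [3, 5, 7, 9])
    print("predicted y at x=5:", slope * 5 + intercept)

    # Discrete target: the model outputs one of the known labels.
    labeled = [(1.0, "no"), (2.0, "no"), (8.0, "yes"), (9.0, "yes")]
    print("predicted label at 7.0:", nearest_centroid(labeled, 7.0))
```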

To restate the distinction: in supervised learning, each training example carries a label indicating its true value, so the algorithm learns a mapping from inputs to known outputs. In unsupervised learning, no labels are given to indicate what the correct answers are. Instead, algorithms group items together based on their similarity or otherwise uncover structure in the data.
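
Grouping by similarity without labels can be illustrated with a stripped-down clustering routine. The sketch below is a simplified one-dimensional variant of k-means with exactly two clusters, using made-up numbers; the choice of the minimum and maximum as starting centers is an assumption made to keep the example deterministic.

```python
def two_means(values, iterations=10):
    """Split numbers into two groups around two iteratively updated centers."""
    low, high = min(values), max(values)
    near_low, near_high = list(values), []
    for _ in range(iterations):
        # Assign every value to the closer of the two centers.
        near_low = [v for v in values if abs(v - low) <= abs(v - high)]
        near_high = [v for v in values if abs(v - low) > abs(v - high)]
        if not near_high:
            break  # all values coincide with one center
        # Move each center to the mean of its group.
        low = sum(near_low) / len(near_low)
        high = sum(near_high) / len(near_high)
    return sorted(near_low), sorted(near_high)


if __name__ == "__main__":
    readings = [1.0, 1.2, 0.8, 9.0, 9.5, 10.0]
    print(two_means(readings))
```

No labels are supplied anywhere; the two groups emerge only from how close the values are to each other.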

Machine learning algorithms can also be grouped by the kind of model they fit: linear methods, non-linear methods, and kernel methods. Linear methods include linear regression and logistic regression. Non-linear methods include decision trees and neural networks. Kernel methods include support vector machines and Gaussian processes. Tasks such as classification, regression, clustering, and anomaly detection can be tackled with methods from any of these groups.

Monday, June 26, 2023

Change Oracle Database Compatible Parameter in Primary and Standby Servers

To change the COMPATIBLE parameter to 19.0.0.0 on both the Primary and Standby servers, follow the steps below. Please note that this process requires database downtime. Change the parameter on the Standby first, then on the Primary. Start by checking the current value:

SQL> SELECT value FROM v$parameter WHERE name = 'compatible';

VALUE

-------------------------------------------------------------

12.2.0

ALTER SYSTEM SET COMPATIBLE= '19.0.0.0' SCOPE=SPFILE SID='*';

Bounce the Standby database, bringing it back up in the mounted state, and restart the Managed Recovery Process.

[oracle@sajidserver01 ~]$ srvctl stop database -d sajid_texas

[oracle@sajidserver01 ~]$ srvctl start database -d sajid_texas -o mount

alter database recover managed standby database disconnect from session;

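Now change the compatibility on the Primary database. Make sure you take a proper RMAN backup of your database before doing so: once COMPATIBLE has been raised it cannot simply be set back to a lower value, so that backup is what you would need if you want to revert to the old compatibility.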

ALTER SYSTEM SET COMPATIBLE= '19.0.0.0' SCOPE=SPFILE SID='*';

Bounce the Primary database now and make sure there is no lag in DGMGRL.

[oracle@sajidserver01 ~]$ srvctl stop database -d sajid_pittsburgh

[oracle@sajidserver01 ~]$ srvctl start database -d sajid_pittsburgh

SQL> SELECT value FROM v$parameter WHERE name = 'compatible';

VALUE

-------------------------------------------------------------

19.0.0.0

DGMGRL> show configuration;

Configuration - SAJID_CONF

Protection Mode: MaxPerformance

Members:

sajid_pittsburgh - Primary database

sajid_texas - Physical standby database

Fast-Start Failover: Disabled

Configuration Status:

SUCCESS (status updated 50 seconds ago)
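
Because COMPATIBLE can only be raised, it can be worth checking the current and target values before running ALTER SYSTEM. The following is a minimal sketch of such a pre-check in Python; the function names are hypothetical, and it only compares the version strings shown above without connecting to any database.

```python
def parse_compatible(value):
    """Turn a COMPATIBLE string such as '12.2.0' into a tuple of ints, padded to 4 parts."""
    parts = [int(p) for p in value.strip().split(".")]
    return tuple(parts + [0] * (4 - len(parts)))


def is_upgrade(current, target):
    """True only if the target value is strictly higher than the current one."""
    return parse_compatible(target) > parse_compatible(current)


# Values taken from the example in this post.
can_proceed = is_upgrade("12.2.0", "19.0.0.0")
```

A check like this would refuse a target that is equal to or lower than the current setting, which is the case that cannot be undone without a restore.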

Friday, March 17, 2023

ORA-28017: The password file is in the legacy format.

If you’ve ever encountered the ORA-28017: The password file is in the legacy format error, you know how frustrating it can be to solve. This Oracle database error occurs when an operation needs to update a password file that is still in the pre-12c (legacy) format, for example when creating a user in the password file or granting an administrative privilege. In this blog post, we will discuss what this error means and provide steps for resolving it.

The first step in resolving ORA-28017 is understanding why it occurred in the first place. The issue typically arises when the password file is still in the pre-12c format instead of the current 12c format, which can also record the newer administrative privileges such as SYSBACKUP, SYSDG, and SYSKM. An operation that has to write to the legacy file then fails with "ORA-28017: The password file is in the legacy format".

To resolve this issue quickly and easily without having to upgrade your entire system or reinstall software packages, we suggest following these steps:

- Check whether a legacy password file exists on your server by running the "ls -ltr" command in the directory that holds the password file; it lists the files and directories there, sorted by modification time. If one exists, take a backup copy first and only then remove it using the rm command followed by the filename.

- Create a new password file in the 12c format using the "orapwd" utility provided by default within the ORACLE_HOME/bin directory. Please refer to DOC ID 2112456 for more details about creating password files and related troubleshooting tips.

SQL> create user asmsnmp identified by <password>;

create user asmsnmp identified by <password>

*

Error at line 1:

ORA-28017: The password file is in the legacy format.

Check the output of srvctl config ASM:

[grid@sajidahmed ~]$ srvctl config ASM

Password file: orapwASM

Backup of Password file: <Location>

ASM listener: LISTENER

ASM instance count: 2

Cluster ASM listener: ASMNETLSNR

sqlplus / as sysasm

SQL> alter system flush passwordfile_metadata_cache;

SQL> select * from v$pwfile_users;

USERNAME        SYSDBA SYSOPER SYSASM SYSBACKUP SYSDG SYSKM CON_ID

--------------- ------ ------- ------ --------- ----- ----- ------

SYS             TRUE   TRUE    FALSE  FALSE     FALSE FALSE      0

Now create the asmsnmp user and grant it the SYSASM privilege; the error should no longer occur.

[grid@sajidahmed ~]$ asmcmd orapwusr --add ASMSNMP

[grid@sajidahmed ~]$ asmcmd orapwusr --grant sysasm ASMSNMP

[grid@sajidahmed ~]$ sqlplus asmsnmp/<password> as sysasm

SQL*Plus: Release 19.0.0.0.0 - test on Fri Mar 17 13:31:06 2023

Version 19.18.0.0.0

Copyright (c) 1982, 2022, Oracle. All rights reserved.

Connected to:

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.18.0.0.0

SQL> show user;

USER is "ASMSNMP"

Following the steps above should resolve the "ORA-28017: The password file is in the legacy format" error without much effort.

Wednesday, February 8, 2023

Data Migration in AWS, GCP, Azure, and OCI

- Data Migration: What Is It?

- Types of databases and data

- Names of the data migration technologies used in AWS, GCP, Azure, and OCI

- Data migration tools and strategies from on-premises to cloud services like AWS, GCP, and OCI

- Advantages of both on-premises and cloud databases

- Conclusion

- AWS Database Migration Service (DMS): A fully managed service that makes it easy to migrate data to and from various databases, data warehouses, and data lakes.

- AWS Schema Conversion Tool (SCT): A tool that helps convert database schema and stored procedures to be compatible with the target database engine.

- AWS DMS and AWS SCT can be used together to migrate both the data and the schema.

- Google Cloud Storage Transfer Service: A fully managed service that allows you to transfer large data sets from on-premises storage to Cloud Storage.

- Google Cloud Storage Nearline: A storage service that stores data at a lower cost but with a slightly longer retrieval time.

- Google Cloud SQL: A fully-managed relational database service that makes it easy to set up, maintain, manage, and administer your relational databases on Google Cloud.

- Cloud Dataflow

- Cloud Dataproc

- Cloud SQL

- Cloud Spanner

- Azure Database Migration Service (DMS)

- Azure Data Factory

- Azure Data Lake Storage Gen1

- Azure Data Lake Storage Gen2

- Azure Databricks

- Azure Stream Analytics

- Oracle Cloud Infrastructure Data Transfer Appliance: A physical appliance that allows you to transfer large data sets from your on-premises data center to Oracle Cloud.

- Oracle Cloud Infrastructure FastConnect: A service that provides a dedicated, private connection between your on-premises data center and Oracle Cloud.

- Oracle Cloud Infrastructure File Transfer: A service that allows you to transfer files between your on-premises data center and Oracle Cloud.

- Data Pump

- Data Integrator

- Data Migration Assistant

- GoldenGate

- SQL Developer

- Identification of the data that needs to be migrated

- Planning for the migration, including assessing the data's size and complexity, determining the necessary resources, and developing a migration schedule

- Backup of the existing data

- Testing the migration process

- Execution of the migration

- Verification of the migrated data

- Switchover to the new cloud-based system

- Post-migration monitoring and maintenance

- The specifics of a cloud data migration vary depending on the cloud service provider and the type of data being migrated.
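
The "verification of the migrated data" step in the list above can be sketched as a comparison of row counts and checksums between source and target. The example below is a minimal illustration in Python that uses in-memory SQLite databases as stand-ins for the real systems; the table name, the sample rows, and the helper functions are all assumptions, and a real migration would connect to the actual source and target databases instead.

```python
import hashlib
import sqlite3


def table_fingerprint(conn, table):
    """Return (row_count, sha256 hex digest) for a table, reading rows in a fixed order."""
    # `table` is interpolated into the SQL text, so only pass trusted table names.
    rows = conn.execute(f"SELECT * FROM {table} ORDER BY 1").fetchall()
    digest = hashlib.sha256()
    for row in rows:
        digest.update(repr(row).encode("utf-8"))
    return len(rows), digest.hexdigest()


def make_db(rows):
    """Build an in-memory database that stands in for a source or target system."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO customers VALUES (?, ?)", rows)
    return conn


source = make_db([(1, "Ann"), (2, "Bob"), (3, "Cy")])
target_ok = make_db([(1, "Ann"), (2, "Bob"), (3, "Cy")])
target_bad = make_db([(1, "Ann"), (2, "Bob")])  # one row missing after "migration"

matches = table_fingerprint(source, "customers") == table_fingerprint(target_ok, "customers")
mismatch = table_fingerprint(source, "customers") != table_fingerprint(target_bad, "customers")
```

Comparing a count and a checksum per table is cheap enough to run before the switchover and again during post-migration monitoring, although very large tables would normally be checked in chunks rather than loaded in one query.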